The role of the SQL Database Administrator (DBA) has changed considerably as technology and business needs have advanced. That change began in the early days of relational databases and continues as the role adapts to current trends in data management. In this post, we explore how the SQL DBA position has changed over the years, focusing on key developments and shifts in responsibilities.

Early Days of SQL DBA (1970s-1980s)

The history of SQL databases traces back to 1970, when Edgar F. Codd introduced the relational model. During this era, SQL databases were primarily used by large organizations for data storage and retrieval. The role of a SQL DBA was relatively straightforward, involving tasks such as data modeling, schema design, and query optimization.

SQL DBAs in the early days were responsible for managing physical storage, ensuring data integrity, and optimizing database performance. They worked closely with developers to design efficient database schemas and tune SQL queries. However, the scope of their responsibilities was narrower than it would later become.

The Rise of Enterprise Databases (1990s)

The 1990s saw the proliferation of enterprise databases, with Microsoft SQL Server, Oracle, and IBM DB2 gaining popularity. This period marked the beginning of a significant shift in the role of SQL DBAs. As organizations increasingly relied on databases to store critical business data, SQL DBAs became more integral to the IT infrastructure.

During the 1990s, SQL DBAs were tasked with database installation, configuration, and maintenance. They had to ensure high availability and put backup strategies in place to prevent data loss. Security also became a more prominent concern, with SQL DBAs responsible for implementing access controls and encryption to protect sensitive data.
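A backup only prevents data loss if it can be read back. The sketch below uses Python's built-in sqlite3 module as a small stand-in for an enterprise database, so it illustrates the habit of copying and then verifying rather than any particular vendor's tooling. The `accounts` table and its rows are made up for the example.

```python
import sqlite3

# Source database with some illustrative data (hypothetical table).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL)")
source.executemany(
    "INSERT INTO accounts (balance) VALUES (?)",
    [(100.0,), (250.5,), (0.0,)],
)
source.commit()

# Copy the whole database into a second connection.
backup = sqlite3.connect(":memory:")
source.backup(backup)

# Verify the copy by comparing row counts and contents.
source_rows = source.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall()
backup_rows = backup.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall()
backup_is_valid = source_rows == backup_rows
```

In practice the target would be a file on separate storage, and the verification step would be a scheduled test restore.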

Internet Boom and E-Commerce (Late 1990s-2000s)

The late 1990s and early 2000s witnessed the explosion of the internet and the rise of e-commerce. This had a profound impact on the role of SQL DBAs. Databases became the backbone of online applications, and uptime and scalability became paramount.

SQL DBAs had to adapt to the demands of 24/7 availability and handle large volumes of data. They were now responsible for performance tuning on a much larger scale, employing techniques like indexing, caching, and partitioning to keep query response times fast. Scaling databases vertically (larger servers) and horizontally (more servers) to accommodate growing workloads became a common challenge.
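To make the indexing technique concrete, here is a minimal sketch using Python's built-in sqlite3 module. It compares the query plan for the same lookup before and after an index is added. The `orders` table, the `idx_orders_customer` index name, and the `query_plan` helper are all hypothetical, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(10_000)],
)

sql = "SELECT total FROM orders WHERE customer_id = ?"

# Without an index, the lookup has to scan the whole table.
plan_before = query_plan(conn, sql, (42,))

# With an index on the filter column, SQLite can search instead of scan.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (42,))

print(plan_before)
print(plan_after)
```

The first plan should report a scan of `orders`, and the second a search that uses `idx_orders_customer`. The same before-and-after comparison applies to the execution plans of larger database engines, although each has its own plan format.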

Cloud Computing Era (2010s)

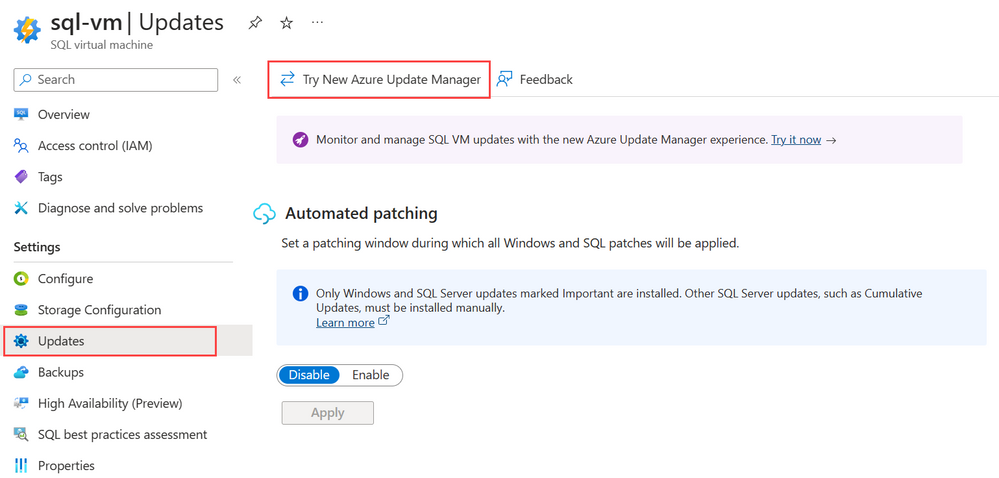

The 2010s brought a significant transformation in the IT landscape with the widespread adoption of cloud computing. Cloud-based databases, such as Amazon RDS, Azure SQL Database, and Google Cloud SQL, became popular choices for organizations looking to reduce infrastructure costs and increase scalability.

SQL DBAs had to adapt to managing databases in the cloud, which introduced new challenges and opportunities. They had to master cloud-specific database services and learn how to optimize costs while maintaining performance and security. Automation and scripting also became crucial skills as cloud providers offered tools for infrastructure as code (IaC) and database management.

Data Explosion and Big Data (2010s-Present)

The explosion of data in the 2010s, driven by social media, IoT devices, and increased digitization, brought another major shift in the role of SQL DBAs. Traditional relational databases on their own were not always sufficient to handle the sheer volume of data being generated.

SQL DBAs had to adapt to the world of big data, which included technologies like Hadoop, NoSQL databases, and distributed data processing frameworks. They needed to understand when to use traditional SQL databases and when to leverage alternative solutions for specific use cases. This required a broader skill set and the ability to work with a variety of data storage and processing technologies.

Data Security and Compliance (2010s-Present)

With data breaches becoming more prevalent, data security and compliance became a top priority for organizations. SQL DBAs found themselves taking on additional responsibilities related to securing data, implementing encryption, and ensuring compliance with regulations such as GDPR and HIPAA.

SQL DBAs also had to stay current on the latest security threats and vulnerabilities and implement best practices to protect databases from unauthorized access and data breaches. This aspect of the role required a solid understanding of cybersecurity principles and the ability to work closely with security teams.

Automation and DevOps (2010s-Present)

In recent years, automation and DevOps practices have transformed the way SQL DBAs work. DevOps principles emphasize collaboration between development and operations teams, with a focus on automating repetitive tasks and achieving continuous integration and continuous delivery (CI/CD).

SQL DBAs have embraced automation tools and scripting languages to streamline database deployment, configuration management, and monitoring. They now play a critical role in enabling the rapid release of database changes while maintaining stability and reliability. This shift has also led to a more proactive approach to database management, with DBAs actively participating in the development process.
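As one illustration of automated database deployment, the sketch below applies versioned schema changes and records which ones have already run, so the same script can be executed safely on every release. It uses Python's built-in sqlite3 module. The `MIGRATIONS` list, the `schema_version` table, and the `apply_migrations` function are hypothetical names for this example and are not taken from any specific migration tool.

```python
import sqlite3

# Hypothetical ordered migrations: (version, SQL statement).
MIGRATIONS = [
    (1, "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE customers ADD COLUMN email TEXT"),
]

def apply_migrations(conn, migrations):
    """Apply migrations not yet recorded and return the versions applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_version")}
    newly_applied = []
    for version, statement in sorted(migrations):
        if version in applied:
            continue  # already deployed, skip
        # The context manager commits on success and rolls back any
        # open transaction if an exception is raised.
        with conn:
            conn.execute(statement)
            conn.execute(
                "INSERT INTO schema_version (version) VALUES (?)", (version,)
            )
        newly_applied.append(version)
    return newly_applied

conn = sqlite3.connect(":memory:")
first_run = apply_migrations(conn, MIGRATIONS)   # applies versions 1 and 2
second_run = apply_migrations(conn, MIGRATIONS)  # nothing left to apply
```

Running the script twice applies each change only once, which is the property a CI/CD pipeline relies on. A production tool would also need careful handling of transactional schema changes, locking, and rollback scripts, none of which this sketch attempts.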

Data Analysis and Business Intelligence (2010s-Present)

As organizations recognize the value of data-driven decision-making, SQL DBAs have expanded their roles to include data analysis and business intelligence (BI) tasks. They are now involved in creating data warehouses, designing data models for analytics, and supporting BI tools like Tableau, Power BI, and QlikView.

SQL DBAs work closely with data analysts and data scientists to ensure that data is available, accurate, and accessible for reporting and analysis. This shift highlights the need for SQL DBAs to have a broader understanding of the business context and the ability to translate data into actionable insights.

Machine Learning and AI Integration (2020s-Present)

The integration of machine learning (ML) and artificial intelligence (AI) into applications has further expanded the role of SQL DBAs. They are now tasked with managing databases that store and serve data for ML and AI models. This includes optimizing database performance for real-time inference, handling large datasets for training, and ensuring data quality for ML/AI algorithms.

SQL DBAs may also collaborate with data scientists to deploy ML models within databases and establish data pipelines that feed data to these models. This intersection of traditional database management and emerging technologies highlights the evolving nature of the role.

Conclusion

The role of a SQL Database Administrator has undergone significant changes over the years, reflecting advancements in technology, the growth of data, and evolving business needs. From beginnings focused on data storage and retrieval, the SQL DBA has become a multifaceted professional responsible for ensuring the availability, performance, security, and strategic use of data within organizations.

As we move into the future, SQL DBAs will continue to adapt to emerging trends, including cloud-native databases, data analytics, AI/ML integration, and new cybersecurity threats. The ability to keep learning as technology changes will remain a key characteristic of successful SQL DBAs, ensuring their continued relevance in data management and IT infrastructure.